What is virtual production?

9 minute read

05/12/2021

Virtual production is one of the most important innovations since digital cameras replaced traditional film stock. It is being used for all kinds of content creation and it’s changing production processes forever. Let's take a look at some of the ways virtual production has been used in recent years and how real-time production is affecting the production pipeline.

What is virtual production?

Virtual production uses a game engine to bring the real and digital worlds together, creating virtual sets and environments during filmmaking. It allows for more flexibility and creativity, because not every set has to be physically built and crews don't have to be flown to physical locations. It also lets parts of the filmmaking process happen virtually, so people can work remotely.

Some examples of virtual production include world capture (location/set scanning and digitization), visualization (previs, techvis, postvis), performance capture (mocap, volumetric capture), simulcam (on-set visualization), and in-camera visual effects (ICVFX).

One of the best-known virtual production methods replaces the traditional green screen with an LED wall. The wall surrounds actors and physical props and displays a scene rendered in real time by a game engine.

When the live-action camera moves, the perspective of the virtual environment on the LED wall shifts with it, much as a game camera navigates a virtual landscape. Because the imagery moves in step with the camera, objects at different virtual distances shift by different amounts. This effect is called parallax, and it creates the illusion that actors, props, and the digital environment share a single physical location. Live camera tracking feeds the camera's exact movements into the rendering platform, which updates the image in real time.
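The geometry behind this can be illustrated with a minimal Python sketch. It makes several simplifying assumptions: a flat LED wall parallel to the camera's path, a camera that only moves sideways, and a pinhole camera with no lens distortion. The names `VirtualPoint` and `wall_position` are hypothetical and not part of Unreal Engine or any stage software. Using similar triangles, the sketch works out where on the wall a virtual object has to be drawn so that the tracked camera sees it in the correct direction.

```python
from dataclasses import dataclass


@dataclass
class VirtualPoint:
    """A point in the virtual environment, in metres (hypothetical 2D model).

    x: lateral offset from the stage origin.
    z: distance from the line the camera travels along (the camera sits at z = 0).
    """
    x: float
    z: float


def wall_position(camera_x: float, point: VirtualPoint, wall_distance: float) -> float:
    """Return the lateral position on a flat LED wall (the plane z = wall_distance)
    where `point` must be drawn so a camera at (camera_x, 0) sees it in the right
    direction. Simplified model: flat wall, sideways moves only, pinhole camera."""
    if wall_distance <= 0:
        raise ValueError("wall_distance must be positive")
    if point.z < wall_distance:
        raise ValueError("the point must lie on or behind the wall")
    # The ray from the camera to the point crosses the wall a fraction
    # wall_distance / point.z of the way along it (similar triangles).
    fraction = wall_distance / point.z
    return camera_x + (point.x - camera_x) * fraction


if __name__ == "__main__":
    mountain = VirtualPoint(x=0.0, z=500.0)  # far background
    rock = VirtualPoint(x=0.0, z=10.0)       # just behind a wall 5 m away
    for cam_x in (0.0, 1.0):
        print(cam_x,
              round(wall_position(cam_x, mountain, 5.0), 3),
              round(wall_position(cam_x, rock, 5.0), 3))
```

With the wall 5 m away, moving the camera 1 m to the right means the distant mountain must be redrawn about 0.99 m along the wall. It almost follows the camera, so it reads as far away. The nearer rock only moves to 0.5 m. That difference is the parallax that live camera tracking preserves. Real stages render a full 3D perspective for the camera's tracked position and orientation. This 2D version only shows the underlying geometry.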

The Mandalorian set. Credit: Industrial Light & Magic

The rise of virtual production

Deepak Chetty, an Unreal Online Learning Producer at Epic Games and a virtual production mentor at CG Spectrum, has developed all kinds of innovative content during his career as a director, cinematographer, and VFX professional. He has worked on films, advertising, and virtual events such as the GCDS fashion show for Milan Fashion Week.

For several years now, Chetty has been at the forefront of helping traditional filmmakers and content creators transition their skills to creating content entirely inside Unreal Engine.

As an Unreal Online Learning Producer at Epic Games, he works with industry authors to create the latest Epic-approved online training materials for using Unreal Engine (UE) in film, television, and virtual production.

Chetty is also helping develop a new Real-time 3D and Virtual Production Course at CG Spectrum, an Unreal Academic Partner. The course equips traditional content creators with knowledge of the engine and its surrounding pipelines, including basic rigging and animation, building film-quality environments, and lighting and atmospherics. It also shows how to pair these skills with their real-world equivalents for roles on a stage volume.

Virtual production is not new, it’s just been democratized. -Deepak Chetty

Chetty says there are more opportunities than ever for individuals to create incredible content on their computers at home. They now have the power and tools to create visuals that may have previously required a large crew and a million-dollar budget.

What is The Volume in virtual production?

Virtual production has led to a new kind of stage known as the Volume: a modular structure made up of thousands of LED lights that curves around actors and smaller sets and displays video content from a game engine.

Initially intended for indoor/outdoor advertising, entertainment venues, and broadcast use, LED panels are now employed on film sets to create volumes (backdrops) for motion picture cinematography.

Thor IV volume set. (Credit: The Hollywood Reporter)

Because much of the Volume stage can be automated, production and set-up times are greatly reduced, and some set-ups can be skipped entirely. A tool called Stage Manager stores presets for locations, camera angles, and lighting set-ups that would normally take hours to establish on set. All of this can be controlled via switchboard, allowing instant set-up changes and the tracking of scenes and version numbers, with everything running as multi-user sessions in separate virtual workspaces.

The Volume allows for live in-camera set extensions, crowd duplication, and on-demand green screen, among a number of other features.

The custom stages built by virtual production innovator Stargate Studios use its True View technologies. They offer scalable studio designs with adjustable walls, screens of different resolutions, interactive lighting tied to the screens, and different types of tracking for interiors and exteriors.

There are currently around 100 LED stage volumes around the world, with at least 150 more in the works.

What are virtual production workflows?

Tools like Unreal Engine and Unity are expanding the narrative options available to filmmakers. The shift to a virtual design process has also introduced new asset departments and virtual art departments, which require the diverse skills of real-time 3D artists.

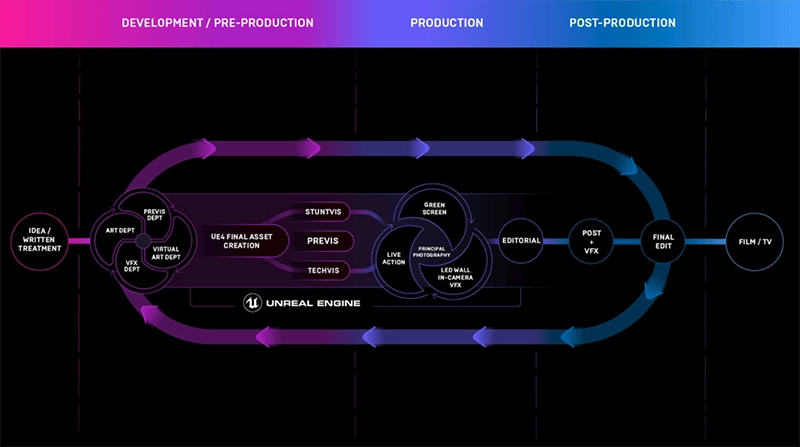

Virtual production pipeline (Source: Unreal Engine)

The emphasis is not so much on the technology as on how it is affecting content creation, changing workflows, and enhancing collaboration.

Along with the LED wall process, virtual production can include chroma key effects, performance capture, and rear projection. The 2019 photorealistic remake of The Lion King was made entirely with virtual production.

Key creatives are now influencing sets in ways they never have before.

The real-time feedback loop lets creatives take part while the shot is being made. It pushes past the limits of pre-vis by rendering final-pixel, photorealistic imagery in-camera, with little to no post-production augmentation required. Directors, DPs, set decorators, and set designers now need to work with these real-time virtual production workflows in high definition.

Departments have established their own virtual workflows, such as virtual pre-lights, virtual blocking, and virtual location scouts. With these workflows, crews can solve complex problems creatively in pre-production rather than in post.

Real-time tools like Unity and Unreal Engine create collaborative on-set experiences that allow filmmakers to work differently.

Pre-vis has evolved into real-time collaboration. It now encompasses post-vis, tech-vis, motion capture volumes and spaces, virtual prototyping, photogrammetry, and the virtual LED screens that make up LED stage volumes. These virtual production tools are also being used for concept design, proof of concept, and animatics.

Whereas pre-vis brings characters to life through animation, virtual production involves rendering and lighting on set, with the focus on the shot. Rendering is now fast enough for lighting to be done on location. As a result, pipelines have changed: production and VFX now happen concurrently rather than one after the other.

Adapting to virtual production innovation

While some traditional VFX artists have embraced the benefits of virtual production, others are having a hard time moving into live-action production on set. They are used to working in isolation in a dark room and not in the dynamic atmosphere of a set.

A colorist, for example, may have to run three DaVinci Resolve systems live across 40 monitors and four cameras in real time. Notes from up to 10 people also need to be addressed in real time, so teams of operators work through them while filming. Tools are being developed to capture notes from all departments so they can be dealt with efficiently.

Studios are working out how to reuse virtual assets for future productions.

Worldbuilding allows for a multitude of locations that can be re-textured and re-purposed for different productions. As asset libraries grow, studios and creators can choose to further monetize their assets by selling them on Unreal Marketplace.

Art departments now have to consider lighting in virtual production pipelines. Directors of Photography (DPs) are brought in to work with virtual crews on pre-lights, choosing frames and sequences. A tech visit is then done on the assets so the crew knows what the DP wants and what it will look like on the shoot day.

Case study: How virtual production was used in The Mandalorian

In 2002, George Lucas shot Star Wars: Episode II Attack of the Clones entirely on high-definition digital cameras, making it one of the first major studio features to do so. Since then, digital cameras have become the industry norm. The Star Wars franchise continues its legacy of VFX innovation with Industrial Light & Magic's use of the Volume stage to produce high-end effects that enhance dynamic storytelling within a familiar universe that has been part of popular culture for over 40 years.

In the making of The Mandalorian, immersive LED stages were used to create virtual three-dimensional environments that allowed creator Jon Favreau to create effects collaboratively in-camera rather than in post-production. VFX artists were brought onto the stage to collaborate with Favreau, the DOP, the art department, and others during the shoot.

Virtual backgrounds, physical set pieces and actors were blended seamlessly together to produce final pixels in real time.

Three operators worked at separate stations in what the crew called the 'brain bar' on set. One station controlled the master Unreal Engine set-up, recording takes and the slate and saving data. Another was dedicated to editing, and a third to compositing. The machines fed into the 'video village', a bank of monitors where takes, temp edits, and composites could be viewed.

Production Designer Andrew Jones had to produce the digital sets on the same timeline as the physical sets, which gave him the flexibility to decide which elements worked better virtually or practically. This let him work toward a unified vision of the final look rather than wait for post-production finishes to be integrated.

The LED screens were also used to light the scenes within the Volume stage. Controlled from an iPad, they provided volumetric color correction, exposure control, time-of-day adjustments, and lights that could be set to any shape or size. The lighting system eliminated chroma key spill, and overhead screens created final reflections and bounce light on the main character's metal suit, reducing the need for a slate of post effects.

Virtual production using Unreal Engine

Epic Games describes Unreal Engine as the world's most open and advanced real-time 3D creation tool. In film and television, it is central to the push to capture final pixels in-camera on set, a process that relies on real-time technology.

Unreal Engine is being used to create content for many industries, including architecture, engineering, construction, games, film, television, automotive, broadcast, and live events, as well as training, simulation, manufacturing, and advertising.

In terms of gameplay (what Unreal was originally built for), these new filmmaking technologies are also influencing how games are produced. The crossover between gaming and film works both ways. The concept of the avatar, which was created for game design, is now being used in feature films, and game developers are learning camera techniques from DOPs on virtual productions that can elevate the quality of game visuals.

As photorealistic technologies improve, both the film and game industries can use the same assets in all stages of production.

Training that bridges gaming and filmmaking focuses on worldbuilding, which is what game engines such as Unreal and Unity do best. This allows filmmakers to shoot in different virtual locations just as they would in the physical world. Gameplay is designed to optimize the imagery around where you are in the game world, and the same principle can be applied to filmmaking: the entire virtual world does not have to be polished to final quality, because tools can increase the resolution of just the locations that make the final cut.

Gaming companies such as Epic have embraced the film industry, which is increasingly using game engines to augment and advance filmmaking techniques and practices. Film productions now require final-quality assets in real time and on a large scale.

Virtual production is changing the way films and television shows are created, allowing for greater flexibility and cost savings in the production process. And at CG Spectrum, our virtual production courses give students the chance to learn Unreal Engine with an experienced mentor.

As an Unreal Authorized Training Center, we offer a comprehensive curriculum that covers everything from previsualization and virtual cinematography to virtual production workflows and real-time animation and visual effects. Our mentors have years of experience in the industry and can provide personalized guidance as students master this cutting-edge technology that will help set them apart in today's rapidly evolving entertainment industry.

Learning virtual production for new filmmakers

More and more filmmakers are choosing virtual production for filmmaking due to its cost-effectiveness and convenience. It allows filmmakers to easily visualize and experiment with different elements of their film, such as sets and special effects, without having to physically construct or spend money on them, creating more access to filmmaking for people with limited resources.

Virtual production streamlines the filmmaking process, providing solutions and opportunities for budding filmmakers making low to no-budget short films. (High-end effects can be achieved using projectors instead of LED screens on a large stage.)

Josh Tanner, who was featured in the Unreal Engine Short Film Initiative, used traditional rear projection along with performance capture to make his sci-fi horror short Decommissioned (watch the making of Decommissioned). Pre-vis was created in Unreal Engine, and the footage was ingested into the engine, where it was edited, finished, and output: an example of an end-to-end virtual production workflow.

Student filmmakers are learning these new practices at the beginning of their careers, giving them an advantage over their more experienced counterparts.

There is great opportunity for new filmmakers to take the lead in exploring the limits of virtual production and learning virtual production workflows as they cut their teeth on their early films.

In the same ways that digital cameras have simplified filmmaking and made it more affordable in the past 20 years, virtual production will further level the playing field for all practitioners. The need for location shoots can be bypassed; effects can be done in-camera; 10 hours of golden hour lighting can be shot in one day, and post-production pipelines can be reduced.

Using virtual production for live events

As well as screen production, virtual production is being used for installations and projections for events, marketing, and commercial purposes. Innovation is being explored in fashion shows, car launches, art spaces, exhibitions, concerts, broadcasting, architecture, etc. The possibilities are limitless, and they are being driven by creatives and client demands.

Virtual locations of games can be repurposed, with pre-existing character rigs used in conjunction with motion capture to create original content.

Rapper Travis Scott performed a live virtual concert within the game Fortnite, in a landmark event that used a game as a performance space.

The Weather Channel used virtual production technologies to show the potentially frightening effects of Hurricane Florence, which was approaching the Carolina coast.

The broadcast animated the storm surge damage along with sound effects, demonstrating the risk to people and the environment alike. The sequence showed the kind of powerful content virtual production can deliver.

Production company The Mill used motion capture and Unreal Engine to bring Apex Legends character Mirage to life during The Game Awards. An actor in a mo-cap suit performed as the game character, interacting with the host in real-time using virtual production technologies.

Fashion label GCDS launched a digital fashion show for Milan Fashion Week 2020 when COVID-19 restrictions shut down public events.

An avatar of singer Dua Lipa was in 'attendance' at the show, giving her stamp of approval to her favorite label. Virtual production was used to create landscapes, models, audiences, and virtual versions of the clothing.

Want to work in virtual production? Get career training and mentorship from industry pros like Deepak!

If you're looking to learn about virtual production, take a look at CG Spectrum's Real-time 3D & Virtual Production Course. Learn worldbuilding, lighting, and camera essentials to create your very own film-quality cinematic shot using the Unreal Engine. You’ll also see how to apply these skills in the real world for job roles on a stage volume, so you can hit the ground running at your first virtual production job!